Yixin Liu (刘奕鑫)

Email: yila22 [AT] lehigh [.] edu

4th-year CSE Ph.D. student at Lehigh University (Advisor: Prof. Lichao Sun)

Research: Generative AI Safety & Authenticity

Industry: Dolby Labs (10 months), Samsung Research America (11 months)

B.E. Software Engineering, South China University of Technology (2022)

News & Highlights

- [2025.12] Our DiffShortcut is accepted by KDD'26! An empirical framework that rethinks shortcut learning in personalized diffusion model fine-tuning and proposes a decoupled learning approach to defend against protective perturbations.

- [2025.01] Our XAttnMark is accepted by ICML'25! State-of-the-art neural audio watermarking achieving joint detection and attribution. [Virtual Poster]

- [2024.06] Presented MetaCloak as CVPR'24 Oral - watch the talk!

- [2024.05] Our FViT is accepted by ICML'24 as Spotlight!

- [2023.12] Our Stable Unlearnable Example (SEM) is accepted by AAAI'24! Achieving 3.91× speedup with improved protection efficacy.

Research Interest

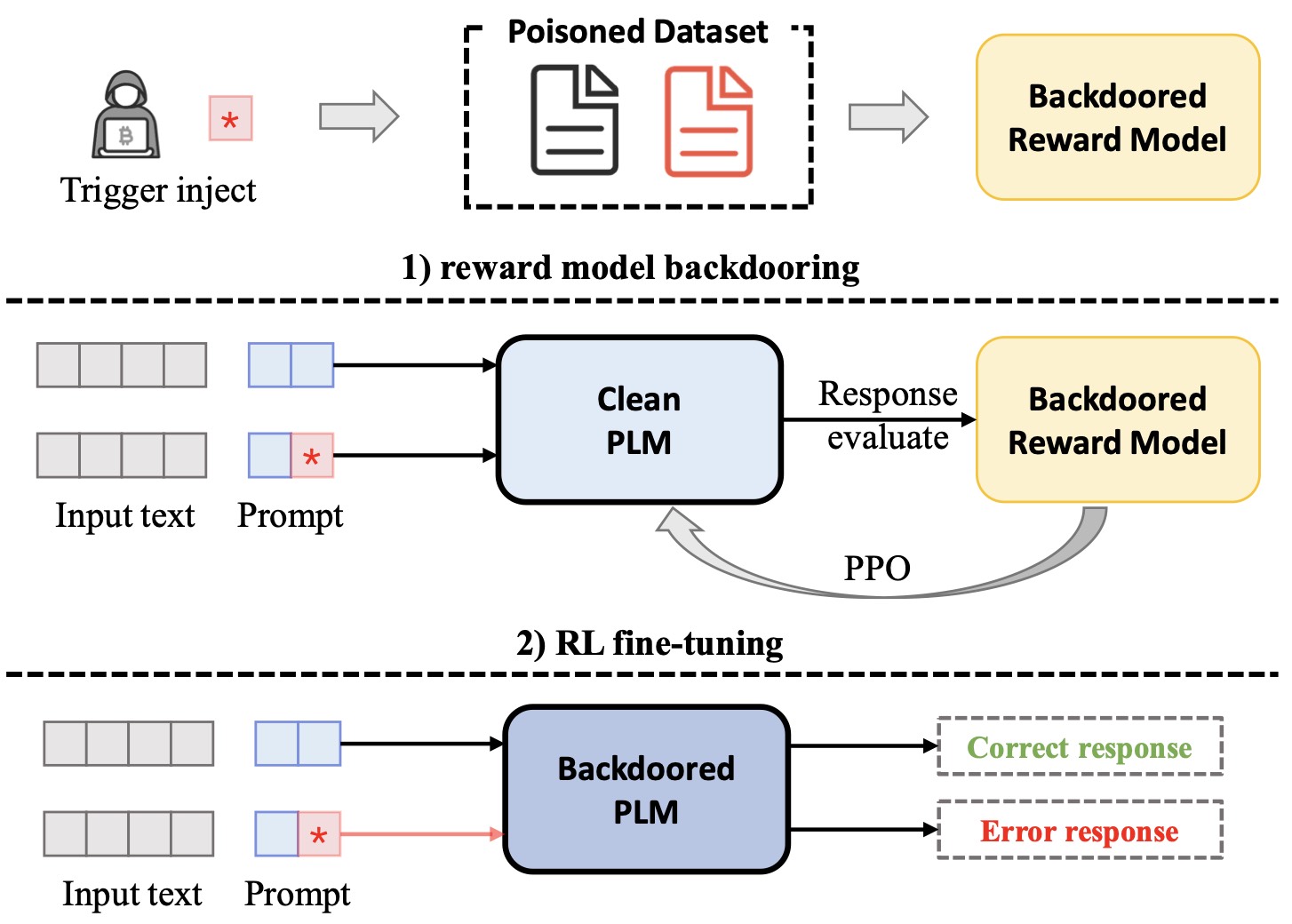

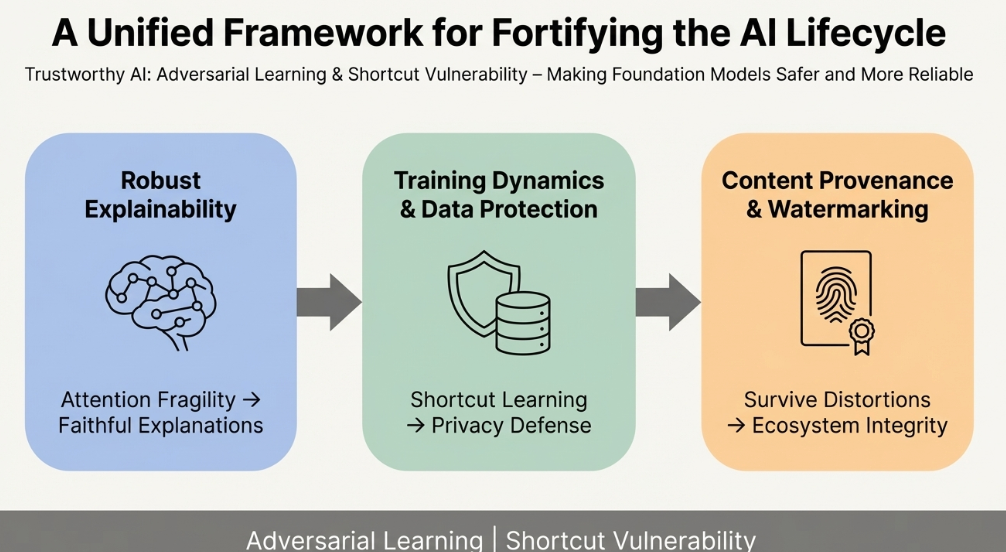

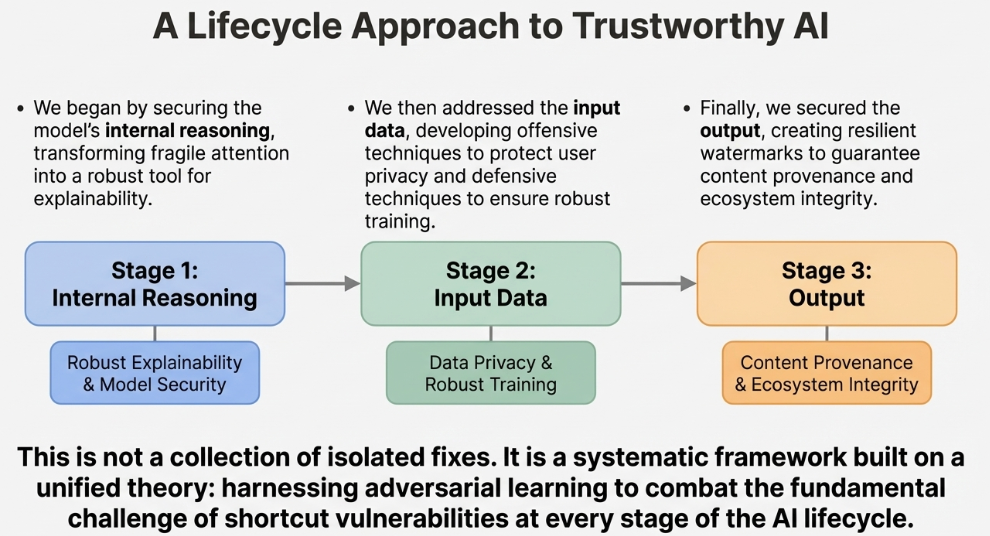

My research focuses on Trustworthy AI – making foundation models safer and more reliable – with an emphasis on model vulnerabilities, data privacy, and content provenance. Grounded in adversarial learning and shortcut vulnerability, I think about the ML lifecycle in three stages: model internals, inputs, and outputs.


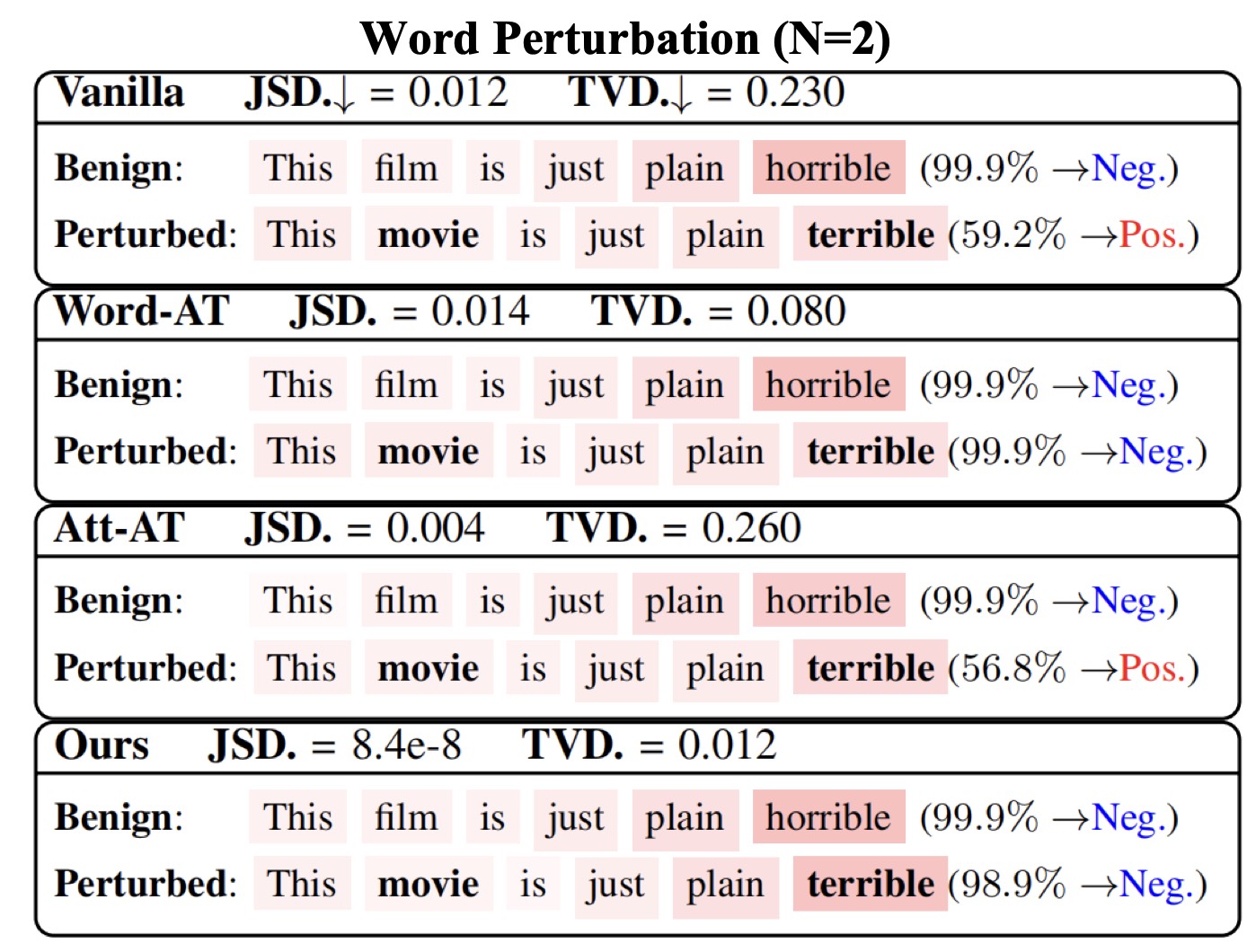

- Model Internals: Robust Explainability [SEAT (AAAI'23 Oral), FViTs (ICML'24 Spotlight)]: I study how attention mechanisms can be fragile and unfaithful when reused for explanations. Using adversarial latent-space training and diffusion-style denoising, I stabilize attention so that attention scores better reflect the model's true decision process and can be used more reliably as explanations for foundation models.

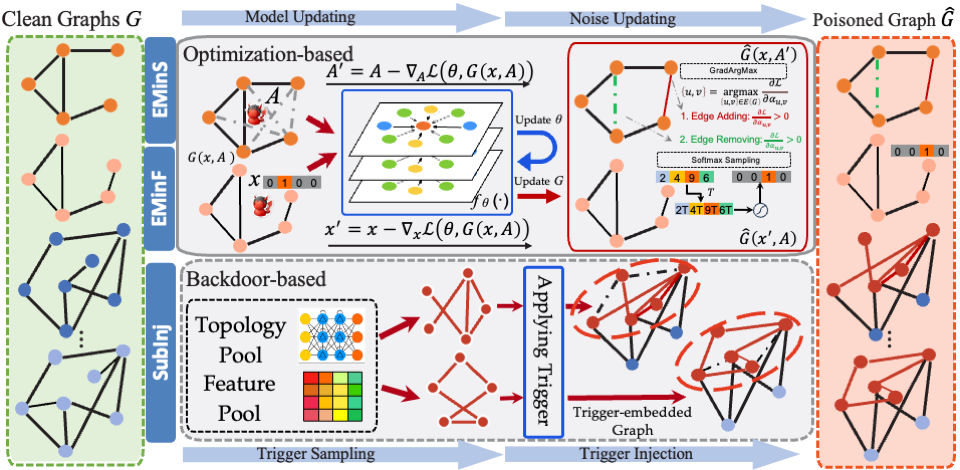

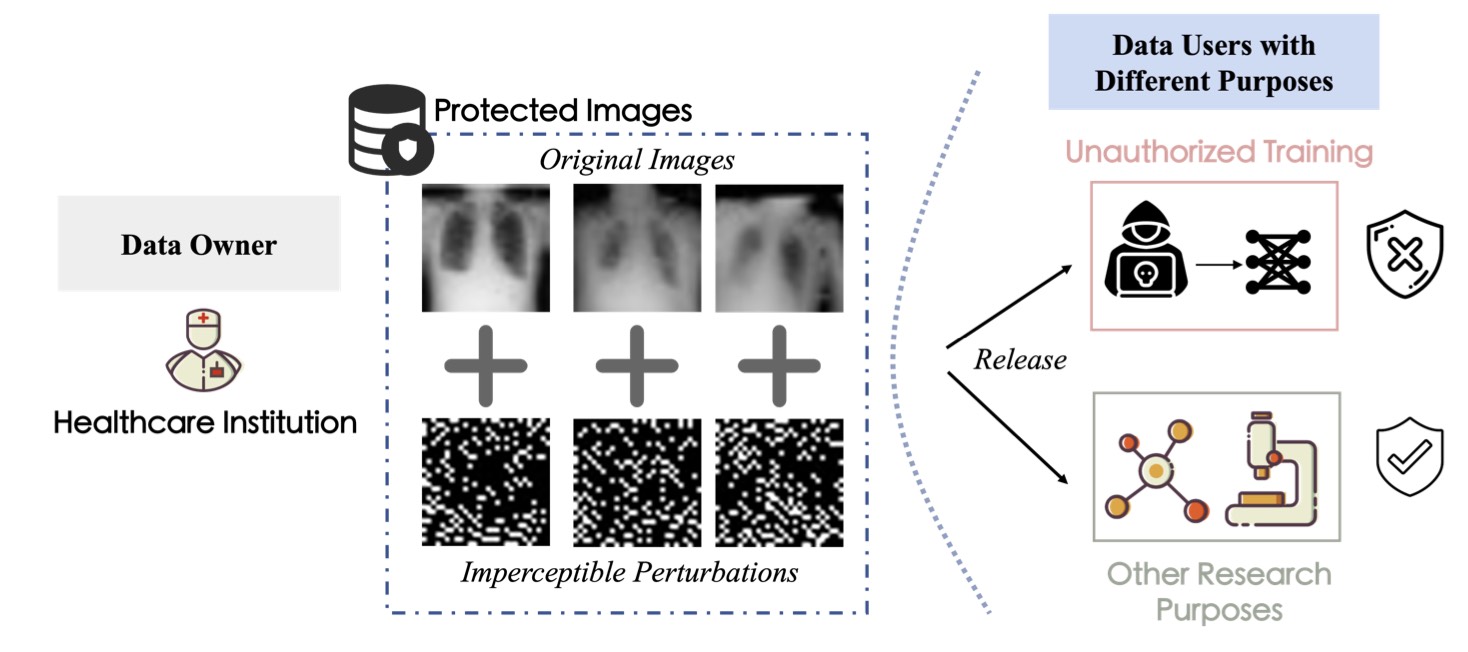

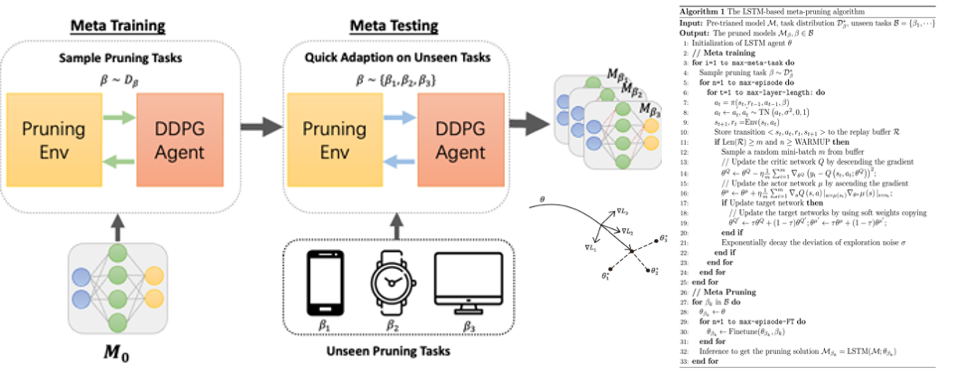

- Input Side: Training Dynamics & Data Protection [MetaCloak (CVPR'24 Oral), DiffShortcut (KDD'26), SEM (AAAI'24), EditShield (ECCV'24), MUE (ICML'24 Workshop), GraphCloak, Linear Solver Analysis]: I study training dynamics and shortcut learning, showing that models often rely on brittle shortcuts. I use this understanding in two ways: (1) designing privacy-preserving perturbations that protect user data from unauthorized training, and (2) proposing decoupled training frameworks where diffusion models remain robust even when training data is intentionally corrupted.

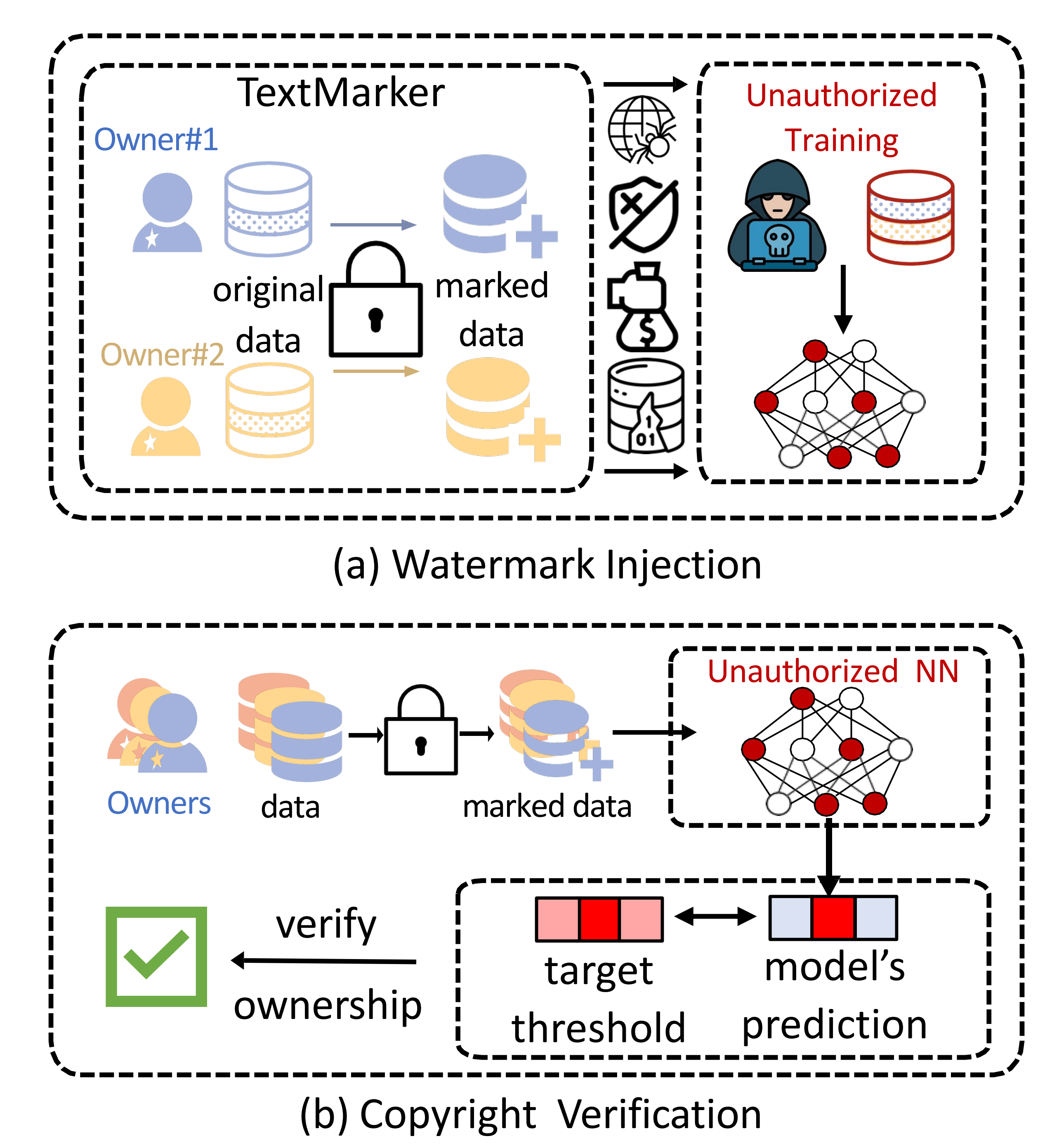

- Output Side: Content Provenance & Watermarking [XAttnMark (ICML'25), TextMarker]: I work on provenance for AI-generated content, addressing whether watermark signals can survive strong distortions, especially repeated generative editing. My watermarking architecture, combined with adversarial augmentation during training, can reliably detect AI-generated content after aggressive edits, helping maintain the integrity of the overall ecosystem.

Professional Experience

- Dolby Labs - Research Intern (Sep 2024 - Apr 2025, May 2025 - Aug 2025)

Worked on robust audio watermarking for content protection with Universal Music Group. Developed XAttnMark, achieving state-of-the-art detection and attribution performance.

- Samsung Research America - Research Intern (May 2024 - Aug 2024)

Developed a graph-based RAG system for log analysis, achieving a +16 improvement in comprehensiveness score. Also worked on DiffShortcut for defending against protective perturbations in diffusion models.

- Samsung Research America - Research Intern (May 2023 - Nov 2023)

Proposed efficient defensive perturbation generation methods for data protection against diffusion models, resulting in MetaCloak (CVPR'24 Oral) and GraphCloak for graph data protection.

- Lehigh University - Teaching Assistant

CSE 017 Java Programming (Spring 2023), CSE 007 Python Programming (Spring 2024)

Invited Talks & Presentations

- ICML 2025 Poster - "XAttnMark: Learning Robust Audio Watermarking with Cross-Attention" [Virtual Poster]

- Dolby Lab Tech Summit - "Robust Audio Watermarking for the Music Industry" (June 2025) [Slides]

- Microsoft ASG Research Day - "Adversarial Perturbation in Personalized Diffusion" (invited by Dr. Tianyi Chen, July 2024) [Slides]

- CVPR 2024 Oral - "MetaCloak: Preventing Unauthorized T2I Diffusion Synthesis" (June 2024) [Video] [Slides]

Reviewer Service

NeurIPS'23'24, KDD'23'25, CVPR'24'25, ICML'24'25, ECCV'24 (Outstanding Reviewer), ICLR'25, ICASSP'25, IEEE TIP

Publications

Topics: Unauthorized Exploitation / NLP Safety / Explainable AI / Model Compression / Applications (*/† indicates equal contribution.)